New Voices RGU Student Series 2024 – Hannah Khan

Category: Blog, New Voices, RGU Student Series 2024

In the 2024 Student Series for the New Voices blog, CILIPS Students & New Professionals Community will be sharing the views of Robert Gordon University students from the MSc in Information and Library Studies.

With special thanks to Dr Konstantina Martzoukou, Teaching Excellence Fellow and Associate Professor, for organising these thought-provoking contributions.

Today’s blog post author is Hannah Khan. As a Master’s student in Information and Library Science, Hannah examines the intersection of Generative AI (GenAI) and fake news, shedding light on the challenges and implications for society. In her spare time, she enjoys delving into Imperial history and immersing herself in books, with a particular fondness for thrillers.

What is the role of information professionals in supporting information literacy skills development for using generative artificial intelligence tools?

Generative Artificial Intelligence (GenAI) is a computational technology that relies on petabytes of human-generated data as fuel (TAULLI, 2023), allowing it to generate unimodal and multimodal content such as text, images, video, speech, music and code (FEUERRIEGEL et al., 2023) in a way that is human-like in reasoning, learning, planning and creativity (SANTOS, 2023).

Software engineer Blake Lemoine has even gone so far as to claim that AI technologies are conscious, with the capacity to feel emotions such as joy and suffering. After disclosing this to the public, he was fired from his Google post for breaching the “company’s confidentiality policy” (BBC, 2022; HARRISON, 2023).

The following table exemplifies the evolving nature of GenAI tools, highlighting their various performance functions and showcasing examples of systems available for use.

| Output modality | Systems |
| --- | --- |
| Text | Conversational agents/search engines: ChatGPT, YouChat |
| Image/Video | Image/video generation and bots: Runway, Midjourney |
| Audio – Speech/Music | Speech generation: ElevenLabs |
| Code | Programming code generation: GitHub Copilot |

Table 1: Types of GenAI systems available for the creation of various modes (adapted from FEUERRIEGEL et al., 2023 p.3).

There are, however, sceptics of GenAI, and for good reason.

Concerns about bias stem from the quality and accuracy of the data that scientists feed into these systems (FEUERRIEGEL et al., 2023). Often these datasets include unfair presuppositions about age, gender, race or sexual orientation, and the outputs of GenAI systems amplify and reinforce such narratives as global truth (HOWARD and BORENSTEIN, 2017).

Using the free text-to-image AI software ‘Stable Diffusion’, analysts at Bloomberg examined the images generated from textual prompts and found that men with lighter skin tones were associated with high-paying jobs such as ‘doctor’ and ‘judge’, while people with darker skin were overrepresented in images for low-paying jobs (NICOLETTI and BASS, 2023). 70% of the results for ‘fast-food worker’ showed people with dark skin, even though 70% of US fast-food workers are white, and the prompt ‘terrorist’ produced stereotypical images of Muslim men with dark beards and head coverings (NICOLETTI and BASS, 2023).

Such results are a reflection of the way in which users propagate information online (ZAJKO, 2022).

The rise of social media, smartphones and the internet has changed the way in which information is spread, and the advancement of GenAI has only amplified the spread of disinformation, misinformation and mal-information, bringing about the discussion of fake news (SANTOS, 2023).

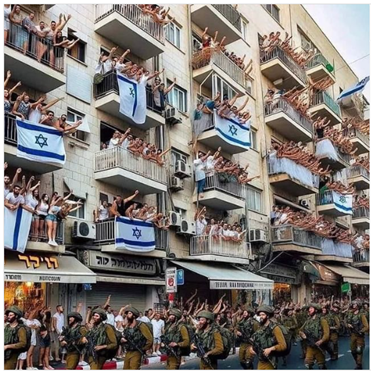

For instance, an image of a burnt Israeli baby posted by Ben Shapiro [a pundit with 6.3 million followers on Twitter] was deleted after claims that it had been artificially generated (THE NEW ARAB, 2023). Because detection systems are inaccurate, it is still unclear whether the image was fictitious (LIGOT, 2023).

The current warfare in Gaza has intensified the distribution of deepfakes for propaganda and political gain (see BEDINGFIELD, 2023; HSU and THOMPSON, 2023), thickening the fog between what is true and what is false in a matter of human suffering.

Fake news is also spread through the use of bots (software-controlled social media accounts), which manipulate the public (SHU et al., 2020). For instance, during the global pandemic, false connections were made between 5G and Covid-19, and deceitful stories were spread about hospitals filled with mannequins (IMPERVA, 2023).

With nearly 66% of the world’s population using the internet (UNESCO, 2023) and 90% of online content estimated to be AI generated by 2026 (SCHICK, 2020), the need to make informed and evaluative judgements has never been greater.

Information Literacy is a life-long holistic learning process and an empowering universal right, allowing us to understand the world around us by navigating appropriately, creating an ethical digital footprint and critically interpreting information, thereby reinforcing democracy and active participation in society (CILIP, 2018).

Information professionals do not believe in resisting AI; rather, they strive to regulate its misuse (POOLE, 2023).

The Core Model emphasises a set of principles on how information professionals can provide support in developing information literacy (SCONUL, 2011):

- Identify: Understand that new information is constantly being produced and reproduced including published/unpublished data.

- Scope: Recognising the various formats and sources available and the services that provide access to them.

- Plan: Using various search techniques, playing with tools, revising keywords and understanding the value of controlled vocabularies and taxonomies.

- Gather: Understanding the elements of citations, reading abstracts, choosing between free/paid resources and making sure information collected is up-to-date.

- Evaluate: Thinking about relevance, publisher/author reputation, quality, bias and credibility.

- Manage: Being honest in information dissemination, curating ethically, keeping systematic records.

- Present: Understanding the difference between synthesising, summarising and attribution.

The above principles can be extended to the context of GenAI, guiding users to interact with generated content in a responsible and ethical way.

Reference list

BBC (2022). Blake Lemoine: Google fires engineer who said AI tech has feelings. BBC News. [online] 23 Jul. Available at: www.bbc.co.uk/news/technology-62275326.

Bedingfield, W. (2023). Generative AI Is Playing a Surprising Role in Israel-Hamas Disinformation. [online] Wired UK. Available at: www.wired.co.uk/article/israel-hamas-war-generative-artificial-intelligence-disinformation [Accessed 8 Nov. 2023].

CILIP (2018). CILIP Definition of Information Literacy. Information Literacy Group.

De Paor, S. and Heravi, B. (2020). Information literacy and fake news: How the field of librarianship can help combat the epidemic of fake news. The Journal of Academic Librarianship, [online] 46(5), p.102218. https://doi.org/10.1016/j.acalib.2020.102218.

Europol (2022). Facing reality? Law enforcement and the challenge of deepfakes, an observatory report from the Europol Innovation Lab. [online] Europol. Luxembourg: Publications Office of the European Union. Available at: www.europol.europa.eu [Accessed 8 Nov. 2023].

Feuerriegel, S., Hartmann, J., Janiesch, C. and Zschech, P. (2023). Generative AI. Business & Information Systems Engineering, 66(1), pp.111–126. https://doi.org/10.1007/s12599-023-00834-7.

Harrison, M. (2023). We Interviewed the Engineer Google Fired for Saying Its AI Had Come to Life. [online] Futurism. Available at: https://futurism.com/blake-lemoine-google-interview.

Howard, A. and Borenstein, J. (2017). The Ugly Truth About Ourselves and Our Robot Creations: The Problem of Bias and Social Inequity. Science and Engineering Ethics, 24(5), pp.1521–1536. https://doi.org/10.1007/s11948-017-9975-2.

Hsu, T. and Thompson, S.A. (2023). A.I. Muddies Israel-Hamas War in Unexpected Way. The New York Times. [online] 28 Oct. Available at: www.nytimes.com/2023/10/28/business/media/ai-muddies-israel-hamas-war-in-unexpected-way.html.

Imperva (2023). Imperva Bad Bot Report.

Ligot, D. (2023). Ben Shapiro Controversy Reveals Potential AI Image Manipulation in Sensitive Contexts. [online] www.linkedin.com. Available at: www.linkedin.com/pulse/ben-shapiro-controversy-reveals-potential-ai-image-sensitive-ligot [Accessed 8 Nov. 2023].

Nicoletti, L. and Bass, D. (2023). Humans Are Biased. Generative AI Is Even Worse. Bloomberg. [online] Available at: www.bloomberg.com/graphics/2023-generative-ai-bias.

Poole, N. (2023). Leading responsible AI – the role of librarians and information professionals. [PowerPoint Presentation]. [online] www.slideshare.net. Available at: www.slideshare.net/nickpoole/leading-responsible-ai-the-role-of-librarians-and-information-professionals [Accessed 12 Nov. 2023].

Santos, F.C.C. (2023). Artificial Intelligence in Automated Detection of Disinformation: a Thematic Analysis. Journalism and Media, 4(2), pp.679–687. https://doi.org/10.3390/journalmedia4020043.

Schick, N. (2020). Deep Fakes and the Infocalypse: What You Urgently Need To Know. London, UK: Conran Octopus.

SCONUL (2011). The seven pillars of information literacy. SCONUL Working Group.

Shu, K., Wang, S., Lee, D. and Liu, H. (2020). Disinformation, misinformation, and fake news in social media: emerging research challenges and opportunities. Cham: Springer.

Taulli, T. (2023). Generative AI. Apress.

The New Arab (2023). Ben Shapiro slammed for sharing ‘AI image of Israeli baby’. [online] https://www.newarab.com/. Available at: www.newarab.com/news/ben-shapiro-slammed-sharing-ai-image-israeli-baby.

UNESCO (2021). Media and information literate citizens. [online] Unesco.org. Available at: www.unesco.org/en/articles/media-and-information-literate-citizens [Accessed 11 Nov. 2023].

UNESCO (2023). Explore data, facts and figures. [online] Unesco.org. Available at: www.unesco.org/en/communication-information/data-center [Accessed 11 Nov. 2023].

Zajko, M. (2022). Artificial intelligence, algorithms, and social inequality: Sociological contributions to contemporary debates. Sociology Compass, 16(3). https://doi.org/10.1111/soc4.12962.